Márcio Sarroglia Pinho

A User Interface Model for Navigation in Virtual Worlds

Paper published in CLEI'99 - Conferência Latino-Americana de Informática - Asunción, Paraguay

Abstract:

One of the most complicated tasks when working with three-dimensional virtual worlds is navigation. Usually, this process requires the use of buttons and key sequences and the development of interaction metaphors, which frequently make the interaction artificial and inefficient. In these environments, very simple tasks, like looking upward and downward, can become extremely complicated. To overcome these obstacles, this work presents an interaction model for three-dimensional virtual worlds based on the interpretation of the natural gestures of a real user while he or she moves through the real world. This model is an example of a non-WIMP (Window, Icon, Menu, Pointer) interface. To test this model we created a device named the virtual bike. With this device, the user can navigate through the virtual environment as if he or she were riding a real bike.

Keywords: virtual reality, non-WIMP (Window, Icon, Menu, Pointer) interfaces

Project partially supported by FAPERGS

1. Introduction

With the arrival of real-time graphics libraries such as OpenGL and Direct3D [Woo 97, Woo 98], it has become possible to display three-dimensional synthetic environments that are visually very similar to real ones.

When the interaction needs in these virtual environments are small and the main tasks are restricted to displaying objects and manipulating them with rotation and translation operations, the problem seems to be solved. Likewise, when the interaction tasks are limited to navigation along pre-defined paths, there is still no problem.

However, when fast and natural navigation is needed in an environment similar to the real world, some problems appear with the user interface tools available today. In general, this navigation process involves the use of buttons and keys and the creation of interaction metaphors, which make the process unnatural and inefficient.

In these environments, simple movements such as lowering or raising the head, or walking sideways or forward, become very complicated and somewhat unnatural. Examples are the well-known navigators Netscape, Virtus, Internet Explorer, WorldToolKit and others. This happens mainly because the traditional WIMP (Window, Icon, Menu, Pointer) interface paradigm is being used in essentially three-dimensional applications, where the user's body is an important part of the interaction process.

With the advent of virtual reality, the quality of the interface between human and machine improved greatly [Pinho 1997]. This improvement is explained by the fact that virtual reality gives users more intuitive ways to interact with systems, without the need for control buttons or other interaction resources [Paush 1998, Mine 1995].

In this work we create an interface model that tries to accomplish the human-computer interaction in a more direct way. The central idea is to map each user's gestures directly to movements inside the virtual world, without requiring memorization of metaphors and sequences of buttons to interact in the environment.

1.1 Virtual environments

A virtual environment can be seen as a dynamic three-dimensional scene, modeled through computer graphics techniques and used to represent the visual part of a virtual reality system. The virtual environment is simply the scene in which the users of a virtual reality system interact.

An important feature of a virtual environment is that it is a dynamic system. In other words, the scenes are modified in real time as the users interact with them. A virtual environment can be designed to simulate an imaginary environment or one as close to the real world as possible.

Also called "virtual worlds", these environments can be modeled with special tools, of which the most popular is VRML [Clark 1996, Hardenbergh 1998, Siggraph 1995]. The degree of interaction in a virtual environment will be greater or smaller depending on the adopted interface and on the devices attached to the system.

1.2 User Interfaces in Virtual Environments

Virtual reality brings a new user interface paradigm. In this paradigm, the user is no longer in front of the monitor but must feel inside the interface. With special devices, virtual reality tries to capture the user’s movements (in general arms, hands, and head) and, starting from these data, to accomplish the human-machine interaction. The interface of a virtual reality system tries to resemble reality, generating the sensation of presence [Slater 1994] in a three-dimensional synthetic environment through a computer-generated illusion. This sensation, also called immersion [Greenhalgh 1997, Dourish 1992, Durlach 1998], is the most important characteristic of virtual reality. The quality of this immersion, that is, how real the illusion seems to be, depends on the interactivity and on the degree of realism that the system is capable of providing.

Interactivity [Forsberg 1998] is the capacity of the system to respond to the user's actions. If the system responds instantaneously, the user will feel that the interface is alive, which creates a strong sensation of reality. For this reason, a virtual reality system should use real-time techniques for user interaction. The degree of realism, in turn, is given by the quality of these responses. The more a response (an image presented or a sound emitted) resembles a real scene, the more involved the user will be.

1.3 Non-WIMP Interfaces

Non-WIMP interfaces are characterized by involving the user in a continuous and parallel interaction with the computational environment. They are user interfaces that do not depend on interface objects such as windows, icons and menus [Van Dam 1997, Morrison 1998]. The main idea is to have interfaces that involve the user completely, interpreting all of his or her gestures (head, body, eyes, arms) through special devices. This interpretation should be continuous and parallel, and the sensations generated should reach all of the user's senses: not only those usually addressed (vision and hearing), but also touch and force, as well as others such as cold and heat.

Today, the most developed form of non-WIMP interface is the three-dimensional virtual reality environment. In these environments, the images presented to the user are updated constantly and a response can be generated to any user movement.

2. The Proposed Interface Model

2.1 A model without Navigation Metaphors

Analyzing the problems presented in the previous section, we created an interface model that tries to accomplish the human-computer interaction in a more direct way. The central idea is to map each user's gestures directly to movements inside the virtual world, without requiring memorization of metaphors and sequences of buttons or keys to interact in the environment.

As other studies have already shown, a very generic interface tends to become difficult to use in specific complex applications [Cooper 1997, Schneiderman 1998]. For this reason, we decided to define a model that treats the specific problem of navigation. The model does not address the problem of object manipulation.

2.2 The available movements

By observing a cyclist's movements while he rides through a city, we have identified a group of movements that are usually executed to analyze a place:

- To look to the sides, upward, downward or back;

- To bring the head closer to an object to observe it in detail;

- To move forward;

- To stop the movement;

- To change the direction of the movement;

- To increase and to reduce the speed of the movement.

A cyclist was taken as the basis; however, we could have opted for other forms of movement, like walking or driving a car. We chose the cyclist for two reasons: when walking, the movements are slow; when driving a car, the possibility of analyzing details (interacting with the environment) is reduced.

2.3 User Interface Actions

Starting from the identification of the user movements in the real world, which we named Goals, we defined a set of movements that the user should perform on the interface in order to execute his or her tasks.

Based on this, the relationships shown in Table 1 were defined.

| Goal | User movement on the interface |
| --- | --- |
| To look to the sides, upward, downward or back | To move the head |
| To bring the head closer to an object to observe it in detail | To move the head |
| To move forward | To pedal |
| To stop the displacement | To stop pedaling |
| To change the direction of the movement | To rotate the handlebar |
| To increase and to reduce the speed of the movement | To pedal faster or more slowly |

Table 1 – The user's goal versus user movements

3. Testing the User Interface Model

In order to test the proposed model, a navigation device was built based on the interpretation of a bicycle’s movements and on the display of images using a virtual reality HMD (Head Mounted Display). To do this, we fitted a set of sensors to a bicycle and used their readings to drive the movement in the virtual world.

After building the prototype device, we carried out a set of tests to evaluate in practice whether the device makes navigation easier and whether the model can be considered valid for the target application.

In the following sections we present details about the construction of the prototype and about the tests performed.

3.1 Virtual bicycle – The Test-tool for the User Interface Model

The prototype is a real bicycle mounted on a tripod so that it can be pedaled without moving. To capture the movements of the handlebar, we attached a potentiometer to it. The speed of the movement is read from a dynamo coupled to the wheel. The movement of the user's head is captured by a position tracker. The prototype’s architecture is shown in Figure 1.

Figure 1 – The Prototype Architecture

3.1.1 Reading the Bicycle and User’s movements

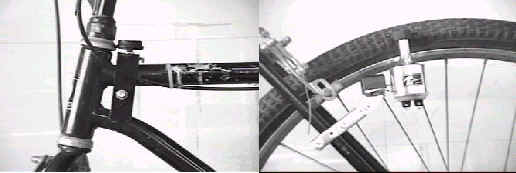

To read the data from the bicycle, two sensors were used: a potentiometer attached to the handlebar and a dynamo attached to the bicycle’s wheel (Figure 2).

Figure 2 – Movement sensors attached to the bicycle

The analog-to-digital signal conversion was done by a device called the TNG-3 Interface [Mindtel 1999]. The TNG-3 is connected to the computer through a serial port.

To interpret the user's head movements, we used an ISOTRAK II position tracker from Polhemus [Polhemus 1999].
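The way the two bicycle sensors could drive the viewpoint can be sketched as follows. This is only an illustration under stated assumptions, not the implementation used in the prototype: the 8-bit reading range, the steering range, the maximum speed, and the names `Rider`, `steering_angle`, `speed` and `step` are all hypothetical, and the head tracker (which would only change the viewing direction) is left out.

```python
import math
from dataclasses import dataclass

# Hypothetical calibration constants; none of these values come from the paper.
ADC_MAX = 255          # assumed 8-bit reading delivered by the A/D interface
MAX_STEER_DEG = 45.0   # assumed handlebar range to each side
MAX_SPEED = 8.0        # assumed speed (scene units per second) at full dynamo output


@dataclass
class Rider:
    x: float = 0.0
    z: float = 0.0
    heading_deg: float = 0.0  # 0 degrees points along +z


def steering_angle(pot_raw: float) -> float:
    """Map the potentiometer reading to a handlebar angle; mid-scale means straight ahead."""
    centered = (pot_raw - ADC_MAX / 2) / (ADC_MAX / 2)
    return centered * MAX_STEER_DEG


def speed(dynamo_raw: float) -> float:
    """Map the dynamo reading to a forward speed; zero output means the user is not pedaling."""
    return (dynamo_raw / ADC_MAX) * MAX_SPEED


def step(rider: Rider, pot_raw: float, dynamo_raw: float, dt: float) -> Rider:
    """Advance the rider by one frame lasting dt seconds."""
    v = speed(dynamo_raw)
    # Assumed steering rule: the turn rate grows with handlebar angle and speed,
    # so a stationary bicycle does not rotate.
    turn_rate = steering_angle(pot_raw) * (v / MAX_SPEED)  # degrees per second
    heading = rider.heading_deg + turn_rate * dt
    rad = math.radians(heading)
    return Rider(rider.x + v * math.sin(rad) * dt,
                 rider.z + v * math.cos(rad) * dt,
                 heading)
```

Calling `step` once per rendered frame with the latest sensor readings keeps the mapping direct: pedaling moves the viewpoint, stopping halts it, and turning the handlebar changes direction, with no keys or menus involved.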

3.1.2 Exhibition of the Sceneries

The displayed three-dimensional images simulate a city in which the user can navigate. To display these three-dimensional scenes, the OpenGL graphics library [Woo 97, Woo 98] and the virtual reality HMD called I-Glasses [I-Glasses 1999] were used.

The cities used were modeled with an editor created specifically for this task. Figure 3 shows a user's view. It is important to notice that there are no controls or menus on the interface, just the three-dimensional images.

Figure 3 – Example of the user's view

3.2 Modeling the Cities

In order to allow navigation and the execution of detailed tests, we decided to model our own validation scenes. For this task we created the City Editor [Braum 1998]. This tool allows the fast creation of small cities with streets, buildings and trees. These entities were considered enough to give the user the visual sensation of a city. The Editor can save cities in the VRML and DXF file formats. Figure 4 shows the Editor's screen and the visualization of a city in a VRML navigator.

Figure 4 – The City Editor

3.3 The Methodology of the Validation Tests

The proposed user interface model was evaluated by comparing the results obtained with the model against those obtained with a commercial navigator such as Internet Explorer. Figure 5 shows a user during a test.

Figure 5 – User in the Virtual Bicycle

In order to test the proposed model and the built device, a set of tasks to be executed by the user was defined [Bowman 1998]. These tasks were the following:

- To walk on a defined path;

- To look for a defined object;

- To recognize the objects of the environment after a navigation;

- To return to a certain point of the city;

- To analyze details in a specific part of the city.

Starting from these tasks, some parameters were defined in order to evaluate whether the model is useful for accomplishing them.

The tasks and their parameters are listed in Table 2.

| Task | Parameters |
| --- | --- |
| To walk on a defined path | Total time spent; level of difficulty |
| To look for a defined object | Total time spent |
| To recognize the objects of the environment after a navigation | Number of recognized objects |
| To return to a certain point of the city | Total time spent |
| To analyze details in a specific part of the city | Level of difficulty |

Table 2 – Tasks and their respective Parameters

To apply the tests, two cities were chosen and the following tasks were defined:

- Go straight ahead, turn right on the third street and turn left after the green building;

- On the third street on the right there is a white box behind a building. Try to find it;

- Walk along the two streets on the right side of this avenue. (When the user returned we asked: Do you remember how many trees you have seen?)

- Try to find the white box from the second task again;

- Read what is written on the sign in front of that red building.

These tasks were performed by 16 users in the following way: half of them used Internet Explorer first and the other half used the Virtual Bicycle first. This was done in order to evaluate whether previous knowledge of the scene could affect the user's performance.
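The counterbalanced ordering described above can be sketched as a simple split of the participants into two groups. The paper does not say how users were assigned to each group, so the rule used here (first half versus second half of a list) and the identifiers `user01` to `user16` are assumptions made only for illustration.

```python
def counterbalance(participants):
    """Split participants into two equal groups that use the interfaces in opposite order."""
    half = len(participants) // 2
    orders = {}
    for i, person in enumerate(participants):
        if i < half:
            orders[person] = ("Internet Explorer", "Virtual Bicycle")
        else:
            orders[person] = ("Virtual Bicycle", "Internet Explorer")
    return orders


# Hypothetical identifiers for the 16 users.
users = [f"user{n:02d}" for n in range(1, 17)]
schedule = counterbalance(users)
```

With this arrangement, any advantage gained from already knowing the city is spread evenly over both interfaces, which is what allows the order effect to be checked afterwards.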

3.4 The Results of the validation tests

The results obtained for each task are shown in the following tables.

| Parameter | Bicycle | Navigator |
| --- | --- | --- |
| Total time spent (on average) | 25 s | 40 s |
| Level of difficulty: Easy | 25.00% | 18.75% |
| Level of difficulty: Medium | 62.50% | 43.75% |
| Level of difficulty: Difficult | 12.50% | 37.50% |

Table 3 – Task A - "To walk on a defined path"

| Parameter | Bicycle | Navigator |
| --- | --- | --- |
| Total time spent (on average) | 28 s | 35 s |

Table 4 – Task B - To look for a defined object

| Parameter | Bicycle | Navigator |
| --- | --- | --- |
| Percentage of successes | 75% | 50% |

Table 5 – Task C - To recognize the objects of the environment after a navigation

| Parameter | Bicycle | Navigator |
| --- | --- | --- |
| Total time spent (on average) | 20 s | 35 s |
| Gain in relation to first time | 20.00% | 12.50% |

Table 6 – Task D - To return to a certain point of the city

| Parameter | Bicycle | Navigator |
| --- | --- | --- |
| Level of difficulty: Easy | 68.75% | 37.50% |
| Level of difficulty: Medium | 18.75% | 31.25% |
| Level of difficulty: Difficult | 12.50% | 31.25% |

Table 7 – Task E - To analyze details in a specific part of the city

The order in which the interfaces were used did not alter the results of the tests.
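To make the tables easier to compare, the short calculation below derives two quantities from the reported figures: the relative reduction in average time when using the bicycle, and the number of users behind each percentage. It is only a reading aid. It assumes that the Task D times are in seconds like those of Tasks A and B, and that every percentage refers to the 16 users; the function names are hypothetical.

```python
N_USERS = 16

# Average times as (bicycle, navigator), copied from the tables for Tasks A, B and D.
avg_time = {"A": (25, 40), "B": (28, 35), "D": (20, 35)}


def time_reduction(bike, nav):
    """Relative reduction of the bicycle time with respect to the navigator, in percent."""
    return 100.0 * (nav - bike) / nav


def users_from_percent(pct):
    """Convert a reported percentage back to a number of users."""
    return round(pct / 100.0 * N_USERS)


reductions = {task: time_reduction(b, n) for task, (b, n) in avg_time.items()}

# Task E difficulty ratings as (bicycle %, navigator %).
task_e = {"Easy": (68.75, 37.50), "Medium": (18.75, 31.25), "Difficult": (12.50, 31.25)}
task_e_users = {level: (users_from_percent(b), users_from_percent(n))
                for level, (b, n) in task_e.items()}

for task, value in reductions.items():
    print(f"Task {task}: bicycle time is {value:.1f}% lower")
for level, (b, n) in task_e_users.items():
    print(f"Task E, {level}: {b} users (bicycle), {n} users (navigator)")
```

Under these assumptions, every percentage in the Task E table corresponds to a whole number of users, and each column adds up to 16.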

4. Conclusions

In general, the results obtained were quite positive for the proposed model. In most cases the developed tool performed better than the commercial navigator used in the tests. Even users who were experts in three-dimensional navigators (7 of 16) considered the proposed tool very efficient.

Some users reported that they felt tired and uncomfortable having to pedal.

Some users found the HMD image quality unsatisfactory. The resolution is limited to 640x480 by the equipment.

The best results obtained with the user interface model were in the test of "To analyze details in a specific part of the city". This is due mainly to the fact that with the built tool, the user just needs to move the head to look closer to an object, without the need to use key combinations or other artifices.

It was also noticed that the user movements were much smoother with the proposed model.

We are now beginning studies on the direct manipulation of three-dimensional objects as well, using virtual reality techniques.

5. References

[Bowman 1998] Bowman, D., Hodges, L. A Methodology for the Evaluation of Travel Techniques for Immersive Virtual Environments. GVU. TR98-04. 1995. Available at ftp://ftp.cc.gatech.edu/pub/gvu/tr/1998/98-04.pdf

[Braum 1998] Braum, M., Sommer, S. "Ambiente de Edição de Cidade". Available at http://grv.inf.pucrs.br

[Cooper 1997] Cooper, A. About Face: The Essentials of User Interface Design. IDG Books. 1997.

[Clark 1998] Clark, Pete. "The Easy VRML Tutorial". Available at http://www.mwu.edu/~pclark/intro.html

[Greenhalgh 1997] Greenhalgh, C. Analyzing movement and world transition in virtual reality teleconferencing. In 5th European Conference on Computer Supported Cooperative Work (ECSCW'97), Lancaster, UK, Kluwer Academic Publishers, 1997.

[Dourish 1992] Dourish, P. and Bellotti, V. Awareness and Collaboration in Shared Workspaces. In Proceedings of CSCW'92, ACM Press, November, 1992.

[Durlach 1998] Durlach, N., Slater, M. Presence in Shared Virtual Environments and Virtual Togetherness. BT Workshop on Presence in Virtual Environments, 1998. Available at http://www.cs.ucl.ac.uk/staff/m.slater/BTWorkshop/durlach.html

[Forsberg 1998] Forsberg, A. et al. Seamless interaction in Virtual Reality. IEEE Computer Graphics and Applications. Nov/Dec 1997, pp. 6-9.

[Hardenbergh 1998] Hardenbergh, J. C. VRML Frequently Asked Questions. http://www.oki.com/vrml/vrml_faq.html, 1998.

[I-Glasses 1999] I-Glasses. I-O Display Systems Inc. http://www.i-glasses.com

[Mindtel 1999] MindTel LLC - Center for Science and Technology. http://www.mindtel.com/mindtel/mindtel.html

[Mine 1995] Mine, M. Virtual Environment Interaction Techniques. UNC Chapel Hill Computer Science Technical Report. TR95-018.

[Morrison 1998] Morrison, S.A., Jacob, R.J. "A Specification Paradigm for Design and Implementation of Non-WIMP User Interfaces," ACM CHI'98 Human Factors in Computing Systems Conference, pp. 357-358, ACM Press, 1998.

[Paush 1998] Paush, R. "Immersive Environments: research, applications and magic". Siggraph’98. Course notes, 1998.

[Pinho 1997] Pinho, Márcio S. and Kirner, Cláudio. Uma Introdução à Realidade Virtual. Short course. X SIBGRAPI. Campos do Jordão, SP, October 1997.

[Polhemus 1999] Polhemus Company. http://www.polhemus.com

[Schneiderman 1998] Schneiderman, B. Designing the User Interface: Strategies for Effective Human-Computer Interaction. Addison-Wesley. 3rd ed., 1998.

[Siggraph 1995] Siggraph'95 - Course notes. http://www.siggraph.org/conferences/siggraph95/siggraph95.html

[Slater 1994] Slater, M., Usoh, M. and Steed, A. Depth of Presence in Virtual Environments. Presence, 1994, 3:130-144.

[Van Dam 1997] Van Dam, A. Post-WIMP User Interfaces. Communications of the ACM. Vol. 40, No. 2, Feb 1997, pp. 63-67.

[Woo 97] Woo, Mason et al. "OpenGL Programming Guide, The Official Guide to Learning OpenGL", Release 1, 1997.

[Woo 98] Woo, M. "A visual introduction to OpenGL programming". Siggraph’98. Course notes, 1998.