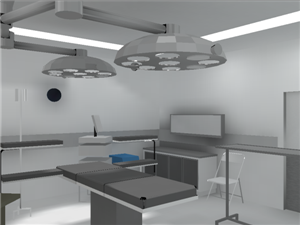

This project aims to test the use of virtual environments for medical training and education. We are developing a virtual surgical center in which students will be able to navigate and interact with the nursing staff and the patient.


Life Support training for medical staff is constantly being improved. However, several problems have been identified in the training process, such as the lack of realism in the exercises and low student involvement. To improve the learning process, the ARLIST project (Augmented Reality for Life Support Training) is being developed to add computational resources, such as sound and images, to the manikins used in training courses. Using Augmented Reality techniques, OpenGL and some additional hardware (e.g. a projector and a video camera), it is possible to build an application that defines which images and sounds should be presented according to the clinical state of the patient.

Related Papers:

PRETTO, Fabrício; MANSSOUR, Isabel Harb; PINHO, Márcio Sarroglia. Augmented Reality for Life Support Training. In: SIBGRAPI - Simpósio Brasileiro de Computação Gráfica e Processamento de Imagens, 2007, Belo Horizonte. To appear, 2007. (PDF)

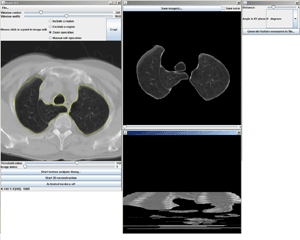

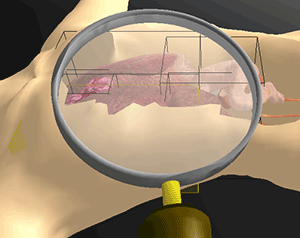

This project is developing a system to identify interstitial lung tumors using texture analysis.
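The text does not say which texture descriptors the system uses. As one common example of texture analysis, the sketch below computes a gray-level co-occurrence matrix (GLCM) for a small quantized image patch, along with two Haralick-style features, contrast and energy. The function names, the pixel displacement and the number of gray levels are illustrative assumptions, not details of this project.

```python
from collections import Counter


def glcm(image, dx=1, dy=0, levels=8):
    """Normalized gray-level co-occurrence matrix for one displacement (dx, dy).

    `image` is a list of rows of integer gray levels in range(levels).
    Entry [i][j] is the fraction of pixel pairs (p, p + (dx, dy)) with levels (i, j).
    """
    rows, cols = len(image), len(image[0])
    counts = Counter()
    for y in range(rows):
        for x in range(cols):
            nx, ny = x + dx, y + dy
            if 0 <= nx < cols and 0 <= ny < rows:
                counts[(image[y][x], image[ny][nx])] += 1
    total = sum(counts.values())
    if total == 0:
        raise ValueError("displacement leaves no pixel pairs inside the image")
    return [[counts[(i, j)] / total for j in range(levels)] for i in range(levels)]


def contrast(p):
    """Haralick contrast: large when neighboring gray levels differ strongly."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def energy(p):
    """Angular second moment: 1.0 for a perfectly uniform patch."""
    return sum(v * v for row in p for v in row)


if __name__ == "__main__":
    stripes = [[0, 3, 0, 3],
               [3, 0, 3, 0]]
    p = glcm(stripes, levels=4)
    print(contrast(p), energy(p))  # 9.0 0.5
```

In a classifier, features like these would be computed for many regions of a CT slice and then compared between healthy and abnormal tissue.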


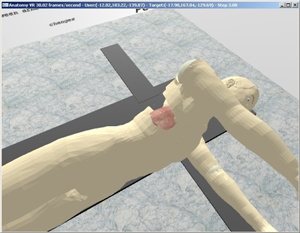

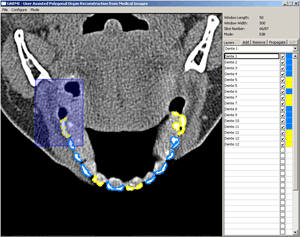

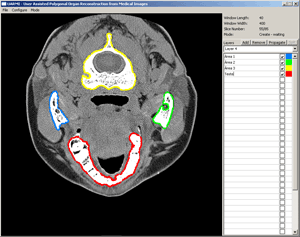

This software aims to enable the three-dimensional reconstruction of human body structures segmented from medical images (called slices). It can currently perform automatic and manual segmentation of DICOM images and generate a 3D file as output. This file can be loaded into CAD (Computer Aided Design) systems that are able to create three-dimensional models from plane sections.

See more details here.
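The "segment each slice, then stack the results" idea can be illustrated with the simplest form of automatic segmentation, an intensity-window threshold. This sketch assumes the DICOM pixel data has already been decoded into nested lists (no DICOM parsing is shown). The threshold values and voxel spacing are hypothetical, and the software itself may use other segmentation methods.

```python
def segment_slices(slices, low, high):
    """Intensity-window segmentation: 1 where low <= value <= high, else 0, slice by slice."""
    return [[[1 if low <= v <= high else 0 for v in row] for row in s] for s in slices]


def slice_areas(mask, pixel_area=1.0):
    """Segmented cross-sectional area of each slice (pixel count times pixel area)."""
    return [sum(map(sum, s)) * pixel_area for s in mask]


def segmented_volume(mask, spacing=(1.0, 1.0, 1.0)):
    """Volume of the structure: voxel count times voxel size (dx * dy * dz)."""
    dx, dy, dz = spacing
    return sum(slice_areas(mask)) * dx * dy * dz


if __name__ == "__main__":
    # Two tiny 2x2 "slices" with made-up intensities.
    slices = [[[0, 100], [200, 50]],
              [[150, 150], [0, 0]]]
    mask = segment_slices(slices, low=100, high=200)
    print(slice_areas(mask))                         # [2.0, 2.0]
    print(segmented_volume(mask, (0.5, 0.5, 2.0)))   # 2.0
```

The per-slice masks correspond to the plane sections that a CAD system would then use to build a surface model.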


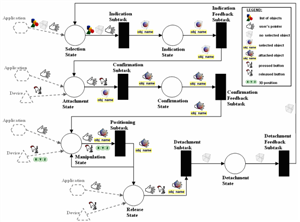

This work presents a methodology to model and build 3D interaction tasks in virtual environments using Petri nets, a technique-decomposition taxonomy and object-oriented concepts. To this end, a set of classes and a graphics library are needed to build an application and to control the dataflow of the net. Operations can be developed and represented as Petri net nodes, and when these nodes are linked, they represent the stages of the interaction process. Integrating these approaches results in a modular application, based on the Petri net formalism, that allows an interaction task to be specified and previously developed blocks to be reused in new virtual environment projects.

See more details here.
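To make the idea concrete, here is a minimal place/transition net in which each transition stands for one stage of a hypothetical selection-and-manipulation task. This is not the authors' class library, whose API is not described here. The place and transition names are illustrative.

```python
from collections import Counter


class PetriNet:
    """Minimal place/transition net (list a place twice to give an arc weight 2)."""

    def __init__(self, marking):
        self.marking = Counter(marking)      # place name -> number of tokens
        self.transitions = {}                # transition name -> (input places, output places)

    def add_transition(self, name, inputs, outputs):
        self.transitions[name] = (Counter(inputs), Counter(outputs))

    def enabled(self, name):
        """A transition is enabled when every input place holds enough tokens."""
        inputs, _ = self.transitions[name]
        return all(self.marking[p] >= n for p, n in inputs.items())

    def fire(self, name):
        """Consume tokens from the input places and produce tokens in the output places."""
        if not self.enabled(name):
            raise RuntimeError(f"transition {name!r} is not enabled")
        inputs, outputs = self.transitions[name]
        self.marking.subtract(inputs)
        self.marking.update(outputs)


if __name__ == "__main__":
    # Hypothetical stages of an interaction task.
    net = PetriNet({"idle": 1})
    net.add_transition("select", ["idle"], ["selected"])
    net.add_transition("attach", ["selected"], ["attached"])
    net.add_transition("release", ["attached"], ["idle"])
    for t in ("select", "attach", "release"):
        net.fire(t)
        print(t, dict(+net.marking))  # unary + drops places with zero tokens
```

Because a stage can only fire when its input places hold tokens, the net itself enforces the order of the interaction. Swapping one block, such as a different selection technique, only changes the transitions attached to that stage.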

Related Papers:

RIEDER, R.; RAPOSO, A. B.; PINHO, M. S. Uma Metodologia Para Especificar Interação 3D utilizando Redes de Petri [A Methodology for Specifying 3D Interaction Using Petri Nets]. Proceedings of the IX Symposium on Virtual and Augmented Reality. Petrópolis, RJ: LNCC, 2007. (PDF)


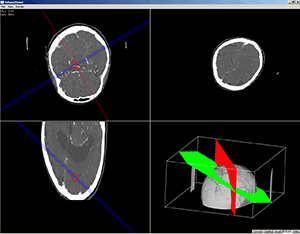

This project has developed a viewer for medical images that displays them in 2D and 3D.


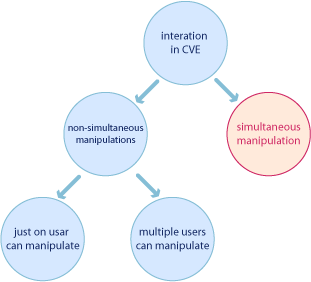

Interaction in virtual environments is an area of study that has developed remarkably in recent years. As a review of the literature shows, interaction can be divided into control, selection, manipulation and navigation. Navigation, in turn, can be divided into travel and wayfinding. The travel task is defined as the movement and orientation of the user, while wayfinding concerns the information presented by the environment to help users determine where they are. This work evaluates wayfinding aid techniques in virtual environments that allow users to navigate with a high degree of freedom for travel. The evaluation was carried out by developing applications that implement some of the existing techniques and by testing them with groups of users, with each group testing one of the chosen techniques. The test results show that such techniques are useful in this type of environment.

Related Papers:

BACIM, Felipe; TROMBETTA, André Benvenuti; PINHO, Márcio Serolli. Avaliação de Técnicas de Auxílio a Wayfinding em Ambientes Virtuais [Evaluation of Wayfinding Aid Techniques in Virtual Environments]. In: IX Symposium on Virtual and Augmented Reality, 2007, Petrópolis, RJ. IX Symposium on Virtual and Augmented Reality, 2007. (PDF)

This work studies robotics and Virtual Reality (VR) techniques in order to develop a simulator that can be used in robotics schools, offering adequate visualization and a simple, intuitive form of interaction. To this end, a Virtual Environment (VE) for robotics was developed in C++ using OpenGL. The simulator models the Scorbot ER-VII robot. VR resources were incorporated to improve visualization and to make it easier for the user to interact with the program. The environment was evaluated by analyzing the results of experiments carried out with users: automatic reports were generated for the quantitative questions, and questionnaires were filled in for the qualitative questions. The results show that the use of VR improves visualization and helps users execute the task, reducing the total time and increasing precision.

See more details here.

The design of virtual environments for applications that have several levels of scale has not been deeply addressed. In particular, navigation in such environments is a significant problem. This paper describes the design and evaluation of two navigation techniques for multiscale virtual environments (MSVEs). Issues such as spatial orientation and understanding were addressed in the design process of the navigation techniques. The techniques were evaluated with two experimental groups and two control groups. The results show that the techniques we designed were significantly better than the control conditions with respect to task completion time and accuracy.

See more details here.

Related Papers:

KOPPER, Regis; NI, Tao; BOWMAN, Doug; PINHO, Marcio Serolli. Design and Evaluation of Navigation Techniques for Multiscale Virtual Environments. In: IEEE Virtual Reality 2006, 2006, Alexandria. IEEE Virtual Reality. IEEE Computer Society, 2006. p. 24-31.

The SmallVR toolkit supports the construction of virtual reality applications. The main goal was to develop a toolkit that allows virtual reality applications to be developed rapidly and makes it easier to port existing computer graphics applications to a virtual reality setup. The toolkit supports scene graph construction, view-culling techniques, geometric model loading, collision detection and multiple-screen rendering. It is modeled in an object-oriented language to make modifications and upgrades easier. SmallVR was built on top of the OpenGL and GLUT libraries.

See more details here.
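Two of the listed features, scene-graph construction and view culling, can be sketched together: a traversal accumulates each node's position from its parents and skips nodes whose bounding sphere lies outside the view volume. SmallVR's own API is not shown in this text. The Python classes, the translation-only transforms and the inward-facing plane convention below are simplifying assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    offset: tuple = (0.0, 0.0, 0.0)   # translation relative to the parent (no rotation/scale here)
    radius: float = 0.0               # bounding-sphere radius of the node's geometry; 0 = no geometry
    children: list = field(default_factory=list)


def sphere_visible(center, radius, planes):
    """View-volume test. Each plane is (nx, ny, nz, d) with the normal pointing inward;
    the sphere is culled when it lies completely behind any one plane."""
    for nx, ny, nz, d in planes:
        if nx * center[0] + ny * center[1] + nz * center[2] + d < -radius:
            return False
    return True


def collect_visible(node, planes, parent_pos=(0.0, 0.0, 0.0), out=None):
    """Depth-first traversal: accumulate world positions and keep nodes that pass culling."""
    if out is None:
        out = []
    world = tuple(p + o for p, o in zip(parent_pos, node.offset))
    if node.radius > 0 and sphere_visible(world, node.radius, planes):
        out.append(node.name)
    for child in node.children:
        collect_visible(child, planes, world, out)
    return out


if __name__ == "__main__":
    # Camera looking down -z; a single near plane keeps points with z <= -1.
    near = (0.0, 0.0, -1.0, -1.0)
    scene = Node("root", children=[
        Node("table", (0.0, 0.0, -5.0), 1.0, [Node("lamp", (0.0, 1.0, 0.0), 0.5)]),
        Node("behind_camera", (0.0, 0.0, 5.0), 1.0),
    ])
    print(collect_visible(scene, [near]))  # ['table', 'lamp']
```

A full view frustum would pass six planes (near, far, left, right, top, bottom). A production scene graph would also store complete 4x4 transforms rather than translations only.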


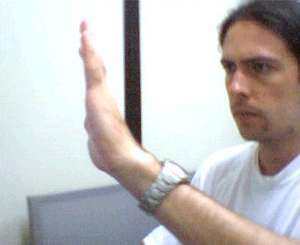

Virtual Reality technology has opened up the possibility of using computers with a level of interaction superior to that of traditional keyboard-and-mouse interfaces. This is achieved through devices that virtually place users inside a computer-generated environment. In this virtual environment, users can see and manipulate, in three dimensions, the virtual objects it contains, interacting with them much as they would in the real world. The degree of interaction in virtual environments depends on the ability to track certain parts of the body, such as the head, the hand or even the whole body. It is also important that the environment support operations that let users interact with virtual objects as they would in the real world, giving them the feeling of being immersed in another reality. Unfortunately, the cost of such equipment and the number of wires that must be attached to the user's body, among other restrictions, limit the use of Virtual Reality in everyday life.

This work presents an alternative to the position and orientation tracking hardware used to track the user's hand. Its contribution is the use of Image Processing and Computer Vision techniques to implement a video-based hand tracker. The project is divided into three distinct phases. The first phase detects the hand in an image through skin color segmentation; in this phase, four skin segmentation algorithms are implemented and many tests are performed with two skin models and two color spaces. The second phase calculates the hand position, for which two algorithms are implemented and tested. In the third phase, the hand orientation is obtained through a Computer Vision technique called Image Moments. Then, by analyzing the hand contour, features such as the fingertips, the valleys between the fingers and the wrist are detected. These features can be used to obtain the 3D hand position and orientation. The text describes each development phase, the techniques used and their results in detail.

See more details here.
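The three phases can be sketched on a tiny binary image. For phase one, the skin test below is a single, widely cited explicit RGB rule, chosen only for brevity; it is not necessarily one of the four algorithms evaluated in the work. Phases two and three are approximated by the centroid (first-order moments) and the principal-axis angle from the second-order central moments, θ = ½·atan2(2μ11, μ20 − μ02). All function names are hypothetical.

```python
import math


def is_skin(r, g, b):
    """One widely cited explicit RGB skin rule (for uniform daylight illumination)."""
    return (r > 95 and g > 40 and b > 20
            and max(r, g, b) - min(r, g, b) > 15
            and abs(r - g) > 15 and r > g and r > b)


def skin_mask(rgb_image):
    """Phase 1 (sketch): binary mask of pixels classified as skin."""
    return [[1 if is_skin(*px) else 0 for px in row] for row in rgb_image]


def hand_pose(mask):
    """Phases 2-3 (sketch): centroid (cx, cy) and principal-axis angle of the mask.

    The angle is in radians from the image x axis, with y growing down the rows;
    it comes from the central moments mu20, mu02 and mu11.
    """
    pixels = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pixels:
        return None
    m00 = len(pixels)
    cx = sum(x for x, _ in pixels) / m00
    cy = sum(y for _, y in pixels) / m00
    mu20 = sum((x - cx) ** 2 for x, _ in pixels)
    mu02 = sum((y - cy) ** 2 for _, y in pixels)
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels)
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return cx, cy, theta


if __name__ == "__main__":
    diagonal = [[1, 0, 0],
                [0, 1, 0],
                [0, 0, 1]]
    print(hand_pose(diagonal))  # centroid (1.0, 1.0), angle pi/4
```

In a real tracker the mask would be cleaned (for example, by keeping the largest connected region) before computing the moments, so that skin-colored background pixels do not shift the centroid.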


SharedMemoryLib is a C library that provides an easy way to create a common memory page shared between different processes or programs.

See more details here.
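SharedMemoryLib's own C interface is not documented in this text. As an analogous illustration of the same idea (one process creates a named memory block, and others attach to it by name and see the same bytes), Python's standard `multiprocessing.shared_memory` module can be used. This is a stand-in for explanation, not the library's API, and the helper names are hypothetical.

```python
from multiprocessing import shared_memory


def create_page(size):
    """Owner side: allocate a named block of `size` bytes that other processes can attach to."""
    return shared_memory.SharedMemory(create=True, size=size)


def attach_page(name):
    """Client side: map an existing block by its name (normally from another process)."""
    return shared_memory.SharedMemory(name=name)


if __name__ == "__main__":
    owner = create_page(64)
    try:
        client = attach_page(owner.name)   # in practice, a different program would do this
        client.buf[:5] = b"hello"          # write through one mapping...
        print(bytes(owner.buf[:5]))        # ...and read it through the other: b'hello'
        client.close()
    finally:
        owner.close()
        owner.unlink()                     # free the block once no process needs it
```

Only the block's name needs to be passed between programs. Coordinating concurrent writers (for example, with a lock or semaphore) is a separate concern that this sketch does not address.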

Cooperative manipulation refers to the simultaneous manipulation of a virtual object by multiple users in an immersive virtual environment (VE). In this work, we present techniques for cooperative manipulation based on existing single-user techniques. We discuss methods of combining simultaneous user actions, based on separating the degrees of freedom between two users, and the awareness tools used to give each user the necessary knowledge of the partner's activities during the cooperative interaction process. We also present a framework for supporting the development of cooperative manipulation techniques based on rules for combining single-user interaction techniques. Finally, we report an evaluation of cooperative manipulation scenarios, with results indicating that, in certain situations, cooperative manipulation is more efficient and usable than single-user manipulation.

See more details here.
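The separation of degrees of freedom can be sketched as a small combination rule: each user's requested change is applied only to the DOFs that a rule table assigns to that user. The two-DOF pose (position plus a single yaw angle), the rule-table format and the averaging fallback for shared DOFs are hypothetical simplifications, not the framework's actual rules.

```python
from dataclasses import dataclass


@dataclass
class Pose:
    position: tuple   # (x, y, z)
    yaw: float        # rotation about the vertical axis, in degrees (single rotational DOF for brevity)


def _resolve(owner, a, b):
    """Pick the request from the user who owns this DOF; 'both' averages the two requests."""
    if owner == "a":
        return a
    if owner == "b":
        return b
    if owner == "both":
        if isinstance(a, tuple):
            return tuple((x + y) / 2 for x, y in zip(a, b))
        return (a + b) / 2
    raise ValueError(f"unknown owner {owner!r}")


def combine(pose, request_a, request_b, rules):
    """Apply two users' simultaneous requests according to a DOF-ownership rule table.

    Requests are dicts with optional keys 'translate' (dx, dy, dz) and 'rotate' (degrees);
    `rules` maps each of those keys to 'a', 'b' or 'both'.
    """
    zero_t, zero_r = (0.0, 0.0, 0.0), 0.0
    move = _resolve(rules["translate"],
                    request_a.get("translate", zero_t), request_b.get("translate", zero_t))
    turn = _resolve(rules["rotate"],
                    request_a.get("rotate", zero_r), request_b.get("rotate", zero_r))
    new_position = tuple(p + d for p, d in zip(pose.position, move))
    return Pose(new_position, (pose.yaw + turn) % 360.0)


if __name__ == "__main__":
    start = Pose((0.0, 0.0, 0.0), 0.0)
    a = {"translate": (1.0, 0.0, 0.0), "rotate": 90.0}
    b = {"translate": (0.0, 5.0, 0.0), "rotate": 30.0}
    # User A positions the object while user B orients it.
    print(combine(start, a, b, {"translate": "a", "rotate": "b"}))
```

Keeping the rule table separate from the single-user techniques means the same selection and manipulation code can be reused while only the ownership rules change between scenarios.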

Related Papers:

PINHO, M. S.; BOWMAN, D. A.; FREITAS, C. M. D. S. Cooperative Object Manipulation in Immersive Virtual Environments: Framework and Techniques. In: ACM Virtual Reality Software and Technology, 2002, Hong Kong. p. 171-178.


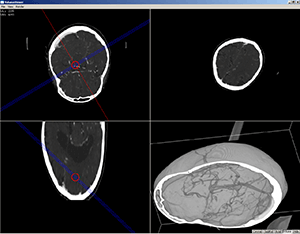

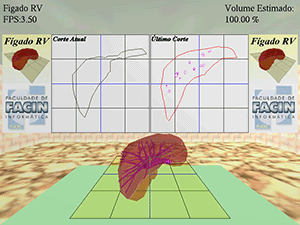

The main objective of this work is to help surgical specialists plan hepatectomies. It proposes the development of a Virtual Environment to support the planning of hepatectomy surgery. Some of the techniques that modern medicine uses to plan hepatic surgery are described, as well as the difficulties involved in the process. The methods for acquiring medical images and the techniques for generating a virtual model of a human liver are briefly explained. Computer graphics is presented as a useful tool for medicine in areas such as phobia treatment and teaching. The software will be developed by combining computer graphics and virtual reality techniques in order to provide an intuitive human-machine interface. It will show the virtual liver, based on data from a real patient, together with a user-controlled cutting plane that helps the surgeon find the appropriate place to make the section. The human-machine interface will be designed so that the surgeon does not need to be a computer expert.

See more details here.
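A user-controlled cutting plane can be reduced to a sign test: each voxel of the segmented liver falls on one side of the plane or the other, which gives a rough idea of how much tissue each candidate cut would leave. The voxel representation and the idea of reporting a fraction are assumptions made for illustration; the text does not say that the planned software computes this.

```python
def plane_side(point, plane_point, normal):
    """Signed value whose sign tells which side of the plane `point` lies on.
    (It equals the distance only when `normal` has unit length.)"""
    return sum((p - q) * n for p, q, n in zip(point, plane_point, normal))


def split_by_plane(voxels, plane_point, normal):
    """Partition voxel centers; points exactly on the plane go to the 'front' side."""
    front, back = [], []
    for v in voxels:
        (front if plane_side(v, plane_point, normal) >= 0 else back).append(v)
    return front, back


def front_fraction(voxels, plane_point, normal):
    """Fraction of the segmented liver's voxels on the front side of the cut."""
    front, _ = split_by_plane(voxels, plane_point, normal)
    return len(front) / len(voxels)


if __name__ == "__main__":
    # Made-up 4x1x1 "liver" and a plane at x = 1.5 facing +x.
    voxels = [(x, 0, 0) for x in range(4)]
    print(front_fraction(voxels, (1.5, 0.0, 0.0), (1.0, 0.0, 0.0)))  # 0.5
```

As the surgeon drags or rotates the plane, only `plane_point` and `normal` change, so the split can be recomputed every frame for moderately sized voxel sets.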


In this work, a set of tests was developed to evaluate the effectiveness of touch sensations in simple virtual environment tasks. The work focused on the interaction model defined by Doug Bowman, which subdivides the manipulation process into four distinct phases: selection, attachment, positioning and release. This design choice allows us to analyze the specific characteristics of each phase of the interaction process and, for each of them, to seek appropriate ways to improve the interactive experience. Based on this analysis, the type of tactile stimulus to be generated in each phase was defined, always with the goal of improving task execution.

See more details here.

Related Papers:

KOPPER, Régis Augusto Poli; SANTOS, Mauro Cesar Charão dos; PROCHNOW, Daniel; PINHO, Marcio Serolli; LIMA, Julio Cesar. Projeto e Desenvolvimento de dispositivos de geração de tato [Design and Development of Touch-Generation Devices]. In: VII Symposium on Virtual Reality, 2004, São Paulo. 2004. v. 0, p. 65-75. (PDF)

This project has built a system to support the use of Augmented Reality in outdoor environments.

See more details here.

“Remote Memory” is a library that supports distributed shared memory for collaborative applications.

See more details here.

This work presents the design and development of a robot movement system that uses virtual reality devices, such as a glove and a three-dimensional position tracker. With these devices, users can make whatever movements they wish with their hand and the robot will repeat them. The system captures the user's movements and translates them into robot commands. It also implements a three-dimensional simulation environment in which many programming tests can be carried out.

See more details here.

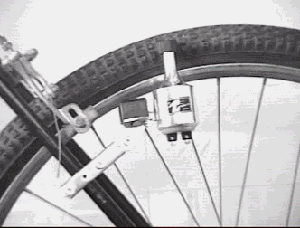

One of the most complicated tasks when working with three-dimensional virtual worlds is navigation. This process usually requires the use of buttons and key sequences, along with the development of interaction metaphors, which frequently makes the interaction artificial and inefficient. In these environments, very simple tasks, like looking up and down, can become extremely complicated. To overcome these obstacles, this work presents an interaction model for three-dimensional virtual worlds based on interpreting the natural gestures of a real user while he or she walks in the real world. This model is an example of a non-WIMP (Window, Icon, Menu, Pointer) interface. To test this model, we created a device called the virtual bike, with which users can navigate through the virtual environment exactly as if they were riding a real bike.

(Portuguese version)

See more details here.
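The text does not describe how the bike's sensors are mapped to motion. One plausible sketch, assuming the device reports a pedal rate and a handlebar angle, is a kinematic bicycle model that turns those two readings into a new viewpoint every frame. The function name, parameter names and default values (`meters_per_rev`, `wheelbase`) are hypothetical.

```python
import math


def bike_step(x, z, heading, pedal_rpm, steer_rad, dt,
              meters_per_rev=2.0, wheelbase=1.0):
    """Advance the viewpoint one frame with a kinematic bicycle model.

    heading 0 faces -z (the usual OpenGL "forward"); positive heading turns toward +x.
    pedal_rpm sets the forward speed and steer_rad (handlebar angle) sets the turn rate.
    """
    speed = pedal_rpm / 60.0 * meters_per_rev                 # meters per second
    heading += speed / wheelbase * math.tan(steer_rad) * dt   # turn rate grows with speed and steering
    x += speed * math.sin(heading) * dt
    z -= speed * math.cos(heading) * dt
    return x, z, heading


if __name__ == "__main__":
    x, z, heading = 0.0, 0.0, 0.0
    for _ in range(30):  # one second at 30 frames per second, handlebar turned slightly right
        x, z, heading = bike_step(x, z, heading, pedal_rpm=60.0, steer_rad=0.1, dt=1 / 30)
    print(round(x, 3), round(z, 3), round(heading, 3))
```

Because speed comes only from pedaling and direction only from the handlebar, the user never needs buttons or key sequences to move, which matches the non-WIMP goal described above.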

Related Papers:

PINHO, Marcio Serolli; DIAS, Leandro Luis; MOREIRA, Carlos G. Antunes; KHODJAOGHLANIAN, Emmanuel González; BECKER, Gustavo Pizzini; DUARTE, Lúcio Mauro. A User Interface Model for Navigation in Virtual Environments. CyberPsychology & Behavior, v. 5, n. 5, p. 443-449, 2002.

This project is developing software libraries to make it easier to create immersive applications. The following libraries are now finished:

DXF file Reader - Download here

Polhemus Isotrack II driver - Download here
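The group's DXF reader is only available as a download, so its interface is not shown here. As background, ASCII DXF stores data as alternating lines of group code and value. A minimal reader for LINE entities, using group codes 10/20/30 for the start point and 11/21/31 for the end point, could look like the sketch below. It ignores layers, blocks and every other entity type, and the function names are hypothetical.

```python
def read_dxf_pairs(text):
    """Yield (group_code, value) pairs from ASCII DXF text (codes and values on alternating lines)."""
    lines = [ln.strip() for ln in text.splitlines()]
    for i in range(0, len(lines) - 1, 2):
        yield int(lines[i]), lines[i + 1]


def read_lines(text):
    """Collect LINE entities as ((x1, y1, z1), (x2, y2, z2)) tuples."""
    segments, current = [], None
    for code, value in read_dxf_pairs(text):
        if code == 0:                    # group code 0 starts a new entity or section marker
            if current is not None:
                segments.append(current)
            current = {} if value == "LINE" else None
        elif current is not None and code in (10, 20, 30, 11, 21, 31):
            current[code] = float(value)
    if current is not None:
        segments.append(current)
    return [((c.get(10, 0.0), c.get(20, 0.0), c.get(30, 0.0)),
             (c.get(11, 0.0), c.get(21, 0.0), c.get(31, 0.0))) for c in segments]


if __name__ == "__main__":
    sample = ("0\nSECTION\n2\nENTITIES\n0\nLINE\n8\n0\n"
              "10\n0.0\n20\n0.0\n30\n0.0\n11\n1.0\n21\n2.0\n31\n0.0\n"
              "0\nENDSEC\n0\nEOF\n")
    print(read_lines(sample))  # [((0.0, 0.0, 0.0), (1.0, 2.0, 0.0))]
```

Reading the file as a flat stream of code/value pairs keeps the parser simple. Supporting more entity types mostly means adding another branch for their group codes.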


This project is developing an interaction panel that can be used inside an immersive environment. The user can manipulate a three-dimensional panel using a real panel and a real pointer (like a pencil).
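Mapping the real pointer onto the virtual panel amounts to a ray-plane intersection. This sketch assumes the tracker reports the pointer tip and direction, as well as the panel's corner and two perpendicular unit edge directions. Under those assumptions, the hit point can be expressed in 2D panel coordinates and then compared against the panel's buttons. The function and parameter names are hypothetical, and the text does not describe the tracking setup.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def pointer_on_panel(tip, direction, corner, u_axis, v_axis, eps=1e-9):
    """Intersect the pointer ray with the panel plane.

    The panel starts at `corner` and is spanned by perpendicular unit vectors u_axis, v_axis.
    Returns (u, v) panel coordinates of the hit point, or None if the ray misses the plane.
    """
    normal = cross(u_axis, v_axis)
    denom = dot(normal, direction)
    if abs(denom) < eps:
        return None                      # pointer is parallel to the panel
    t = dot(normal, sub(corner, tip)) / denom
    if t < 0:
        return None                      # panel is behind the pointer
    hit = tuple(p + t * d for p, d in zip(tip, direction))
    rel = sub(hit, corner)
    return dot(rel, u_axis), dot(rel, v_axis)


if __name__ == "__main__":
    # Pointer held 1 unit in front of a panel lying in the z = 0 plane, aimed straight at it.
    print(pointer_on_panel((0.2, 0.3, 1.0), (0, 0, -1), (0, 0, 0), (1, 0, 0), (0, 1, 0)))
```

To decide whether the pointer actually touches the panel, the caller would also check that 0 <= u <= width and 0 <= v <= height for the panel's real dimensions.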

A system for manipulating virtual objects, which can be moved and rotated. This system was our first work in virtual reality. It uses a Powerglove to grab and move the virtual objects.